I like to critique intellectual history (more the armchair type than the academic varietal) because it often reflects the open biases of the commentator rather than a neutral analysis of the past and its relationship to our pending futures. So when I read about Husserl’s ideas of geometry and Galileo or the relative merits of Adam Smith’s The Theory of Moral Sentiments, I leave the critical analysis to the experts, equipped as they are with the proper academic insights. But columnists are not generally subject-matter experts, just enthusiasts who are driven by profession to justify their insights and prognostications by reference to history and ideas. And they substitute biases for depth far too often. This is true again today, with Leighton Woodhouse’s op-ed in The New York Times, “Donald Trump: Pagan King.” It begins gently enough, with Canada’s Mark Carney quoting Thucydides on power in international relations, but then quickly dissolves into an unsupportable argument that Christianity uniquely civilized the pagan world by introducing all the positive morals that we associate with the gentler, humbler aspects of the religion, an argument that rests mostly on a single source.

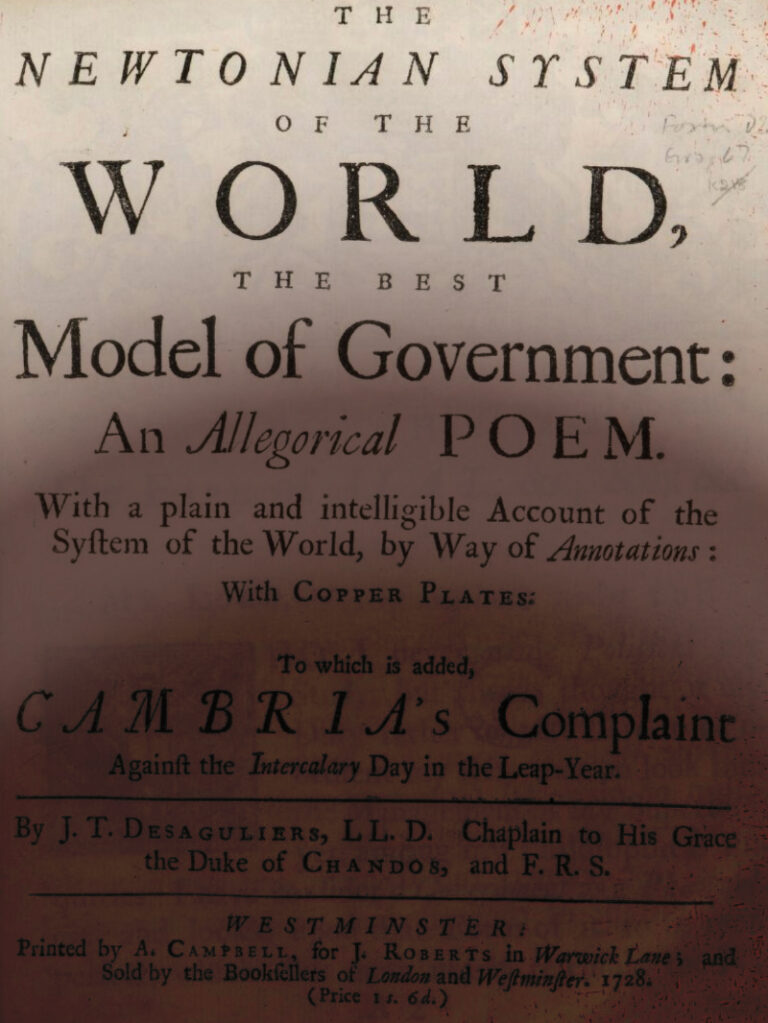

This through line is embarrassed by the theology, history, and facts of how Western civilization matured, as I and hundreds of other commentators have pointed out (this chestnut is getting repetitive, too). This is not meant to rob Christianity of its influence over the last two thousand years, just to temper it responsibly and note that, however one scopes “paganism,” Enlightenment rationality, high and low, shared aspects of pagan forms of thinking and was clearly influenced by them as we move into the formative phase of the modern state period and formulations of democracy.