There is a curious dilemma that pervades much machine learning research. The solutions that we are trying to devise are supposed to minimize behavioral error by formulating the best possible model (or collection of competing models). This is also the assumption of evolutionary optimization, whether natural or artificial: optimality is the key to efficiently outcompeting alternative structures, alternative alleles, and alternative conceptual models. The dilemma is whether such optimality is applicable to the notoriously error-prone, conceptually flexible, and inefficient reasoning of people. In other words, is machine learning at all like human learning?

While trying to understand what Ted Dunning is planning to talk about at the Big Data Science Meetup at SGI in Fremont, CA a week from Saturday (I’ll be talking, as well), I came across a paper called “Multi-Armed Bandit Bayesian Decision Making” that has a remarkable admission concerning this point:

Human behaviour is after all heavily influenced by emotions, values, culture and genetics; as agents operating in a decentralised system humans are notoriously bad at coordination. It is this fact that motivates us to develop systems that do coordinate well and that operate outside the realms of emotional biasing. We use Bayesian Probability Theory to build these systems specifically because we regard it as common sense expressed mathematically, or rather ‘the right thing to do’.

The authors go on to suggest that such systems should therefore be seen as corrective assistants for the limitations of human cognitive processes! Machines can put the rational back into reasoned decision-making. But that is really not what machine learning is used for today. Instead, machine learning is used where human decision-making is unavailable because of the physical limitations of including humans “in the loop,” the scale of the data involved, or the tediousness of the tasks at hand.

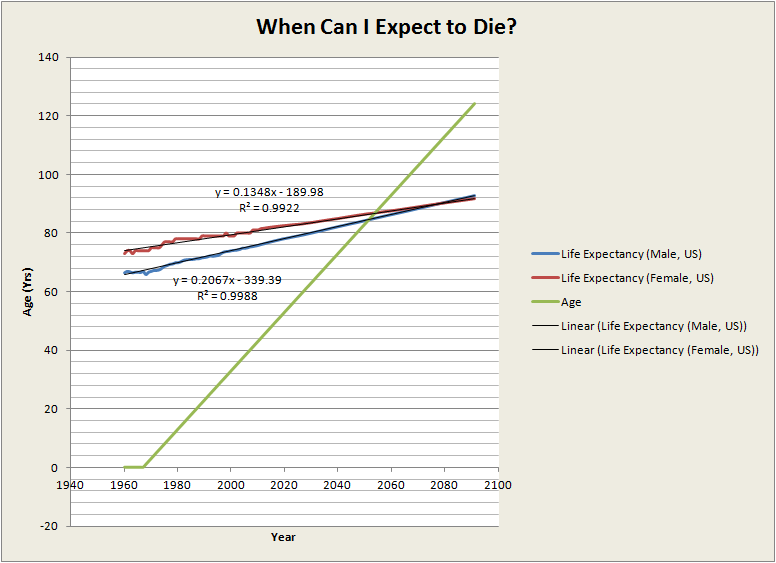

I spent a few minutes calculating my expected date of death today since I just turned 45. It turns out that I am beyond the half-way point in this journey. I’ve created a spreadsheet that you can use to calculate your own demise and produce charts like the one above. The current spreadsheet uses the US Male/Female mortality numbers from the World Bank dataset and then a linear regression for future gains in life expectancy. Other country sets can be easily incorporated.
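For anyone who would rather script it than use a spreadsheet, here is a minimal Python sketch of the same idea. It is not the spreadsheet's actual formula: it assumes you already have a series of (year, life expectancy) pairs, fits an ordinary least-squares line through them, extrapolates to a year of your choosing, and turns the result into a date. The function names (`fit_line`, `projected_life_expectancy`, `expected_death_date`, `fraction_elapsed`) and the 365.25-day year are my own choices for illustration, and the numbers in the usage lines are synthetic placeholders, not World Bank figures.

```python
from datetime import date, timedelta

DAYS_PER_YEAR = 365.25  # assumption: average year length, leap years included


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def projected_life_expectancy(years, expectancies, target_year):
    """Extrapolate the fitted trend line to target_year."""
    slope, intercept = fit_line(years, expectancies)
    return slope * target_year + intercept


def expected_death_date(birth, expectancy_years):
    """Birth date plus the expected lifespan, rounded to whole days."""
    return birth + timedelta(days=round(expectancy_years * DAYS_PER_YEAR))


def fraction_elapsed(birth, today, expectancy_years):
    """Share of the expected lifespan already used up as of `today`."""
    age_years = (today - birth).days / DAYS_PER_YEAR
    return age_years / expectancy_years


# Synthetic, made-up series purely to show the call pattern.
years = [2000, 2001, 2002, 2003, 2004]
expectancies = [70.0, 70.2, 70.4, 70.6, 70.8]
projected = projected_life_expectancy(years, expectancies, 2010)
print(expected_death_date(date(2000, 1, 1), projected))
```

A value above 0.5 from `fraction_elapsed` means you are past the halfway point. Swapping in another country's series only changes the two input lists.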
