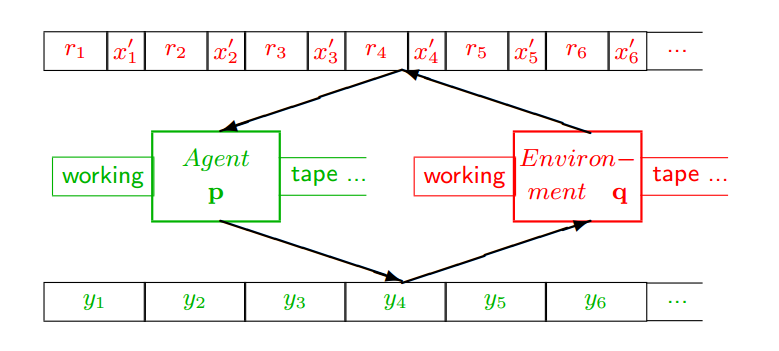

Continuing to develop the idea that social reasoning adds to Hutter’s Universal Artificial Intelligence model, below is his basic layout for agents and environments:

A few definitions: The agent (p) is a Turing machine, which consists of a working tape and a finite table of internal states. Guided by that table, the machine can move the tape left or right, read a symbol from the tape, write a symbol to the tape, and transition between states. That is all that is needed to be a Turing machine, and Turing machines can compute anything that our everyday notion of a computer can. Formally, there are bounds on what they can compute; for instance, no Turing machine can decide whether any given program consisting of the symbols on the tape will stop at some point or run forever without stopping (the so-called “halting problem”). But it suffices to think of the Turing machine as a general-purpose logical machine in that all of its outputs are determined by a sequence of state changes that follow from the sequence of inputs and transformations expressed in the state table. There is no magic here.
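To make the definition concrete, here is a minimal sketch of a one-tape Turing machine in Python. The function name `run_turing_machine`, the `FLIP_BITS` table, and the `max_steps` cap are all illustrative assumptions rather than anything from Hutter; the cap is there only because, per the halting problem, we cannot know in general whether a given table will stop.

```python
from collections import defaultdict

BLANK = "_"

def run_turing_machine(table, tape, state="start", halt="halt", max_steps=1000):
    """Run a one-tape Turing machine for at most max_steps steps.

    table maps (state, symbol) -> (next_state, symbol_to_write, move),
    where move is -1 (left) or +1 (right).
    Returns (final_state, tape_contents_without_outer_blanks).
    """
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == halt:
            break
        symbol = cells[head]                         # read
        state, write, move = table[(state, symbol)]  # look up the transition
        cells[head] = write                          # write
        head += move                                 # move
    if not cells:
        return state, ""
    contents = "".join(cells[i] for i in range(min(cells), max(cells) + 1))
    return state, contents.strip(BLANK)

# Hypothetical example machine: flip every bit, halt at the first blank.
FLIP_BITS = {
    ("start", "0"): ("start", "1", +1),
    ("start", "1"): ("start", "0", +1),
    ("start", BLANK): ("halt", BLANK, +1),
}

print(run_turing_machine(FLIP_BITS, "1011"))  # ('halt', '0100')
```

Everything the machine does is a lookup in `table` followed by a write and a move, which is the sense in which the outputs are fully determined by the inputs and the state table.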

Hutter then couples the agent to a representation of the environment, also expressed as a Turing machine (after all, the environment is likely deterministic). The output symbols of the agent (y) are consumed by the environment, which in turn outputs the results of the agent’s interaction with it as a series of rewards (r) and environment signals (x) that are consumed by the agent once again.
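The coupling can be sketched as a simple loop. This is only an illustration under stated assumptions: the agent and environment are plain Python functions rather than Turing machines, and `interact`, `alternating_environment`, and `flip_last_agent` are hypothetical names invented for the example.

```python
def interact(agent, environment, steps):
    """Couple an agent to an environment for a fixed number of cycles.

    Each cycle the agent emits an action y based on the history so far,
    and the environment answers with a signal x and a reward r.
    """
    history = []
    for _ in range(steps):
        y = agent(history)
        x, r = environment(history, y)
        history.append((y, x, r))
    return history

def alternating_environment(history, y):
    """Toy deterministic environment: x alternates 0, 1, 0, 1, ...
    and the reward is 1 when the action matches x, else 0."""
    x = len(history) % 2
    return x, int(y == x)

def flip_last_agent(history):
    """Toy agent: guess the opposite of the last signal seen (0 at the start)."""
    if not history:
        return 0
    last_x = history[-1][1]
    return 1 - last_x

run = interact(flip_last_agent, alternating_environment, steps=5)
print(sum(r for _, _, r in run))  # 5
```

Here the toy agent happens to match the environment exactly, so it collects the full reward on every cycle.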

Where this gets interesting is that the agent is trying to maximize the reward signal, which implies that the combined predictive model must convert all the history accumulated up to a given point in time into an optimal predictor. This is accomplished by minimizing the behavioral error, and behavioral error is best minimized by choosing the shortest program that also predicts the history. By doing so, you simultaneously reduce the brittleness of the model to future changes in the environment.
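As a rough illustration of the “shortest program that also predicts the history” idea, the sketch below swaps in a deliberately tiny hypothesis class: repeating patterns, ranked by length. That substitution is an assumption made purely so the example can run; searching over all programs, as the formal model requires, is not computable. The names `shortest_periodic_model` and `predict_next` are hypothetical.

```python
def shortest_periodic_model(history):
    """Return the shortest prefix that, repeated, reproduces the whole history.

    The pattern length stands in for program length in this toy setting.
    """
    if not history:
        raise ValueError("history must contain at least one symbol")
    for length in range(1, len(history) + 1):
        pattern = history[:length]
        if all(history[i] == pattern[i % length] for i in range(len(history))):
            return pattern
    return history  # not reached: the full history always reproduces itself

def predict_next(history):
    """Predict the next symbol by extending the shortest consistent pattern."""
    pattern = shortest_periodic_model(history)
    return pattern[len(history) % len(pattern)]

print(shortest_periodic_model("011011"))  # 011
print(predict_next("011011"))             # 0
```

Longer patterns such as the full six-symbol history also fit `"011011"`, but preferring the shortest consistent one is what lets the model extend past the data it has already seen.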

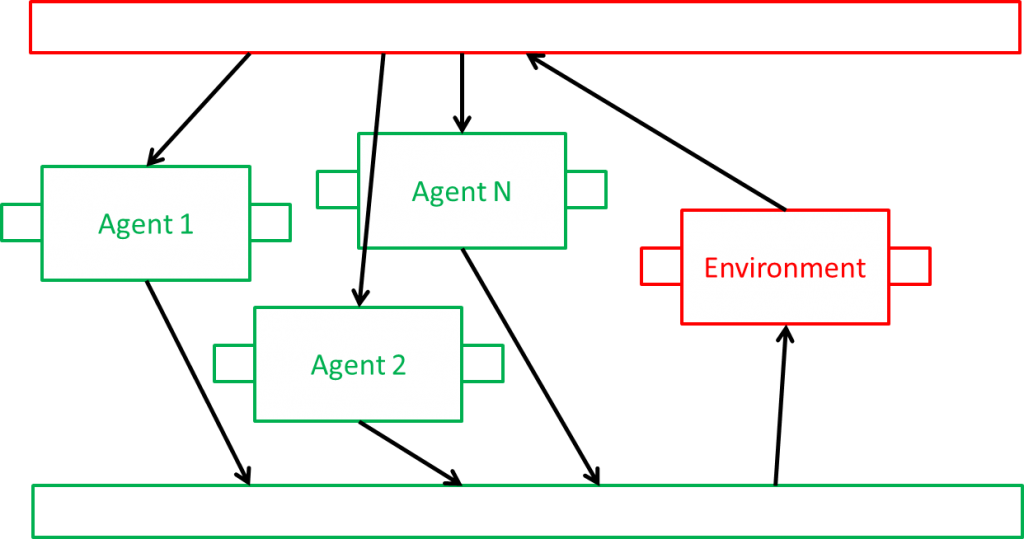

So far, so good. But this is just one agent coupled to the environment. If we have two agents competing against one another, we can treat each as the environment for the other and the mathematics is largely unchanged (see Hutter, pp. 36-37 for the treatment of strategic games via Nash equilibria and minimax). However, for non-competitive multi-agent simulations operating against the same environment there is a unique opportunity if the agents are sampling different parts of the environmental signal. So, let’s change the model to look as follows:

Now, each agent is sampling different parts of the output symbols generated by the environment (as well as the utility score, r). We assume that there is a rate difference between the agents’ input symbols and the environmental output symbols, but this is not particularly hard to model: as part of the input process, each agent’s state table just passes over N symbols, where N is the number of the agent, for instance. The resulting agents will still be Hutter-optimal with regard to the symbol sequences that they do process, and will generate outputs over time that maximize the additive utility of the reward signal, but they are no longer each maximizing the complete signal. Indeed, the relative quality of the individual agents is directly proportional to the quantity of the input symbol stream that they can consume.
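One way to read the “passes over N symbols” rule is that agent N skips N symbols and then reads one, repeating for the length of the stream. The sketch below assumes that reading; `sample_stream` is a hypothetical helper and the letter stream is made-up data.

```python
def sample_stream(stream, agent_number):
    """Return the symbols agent `agent_number` actually consumes.

    Assumed rule: skip agent_number symbols, read one, repeat.
    Agent 0 therefore sees the whole stream.
    """
    return stream[agent_number::agent_number + 1]

stream = "abcdefghij"
print(sample_stream(stream, 0))  # abcdefghij
print(sample_stream(stream, 1))  # bdfhj
print(sample_stream(stream, 2))  # cfi
```

Under this rule agent N consumes roughly 1/(N+1) of the environment’s output, so higher-numbered agents build their models from progressively thinner slices of the signal.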

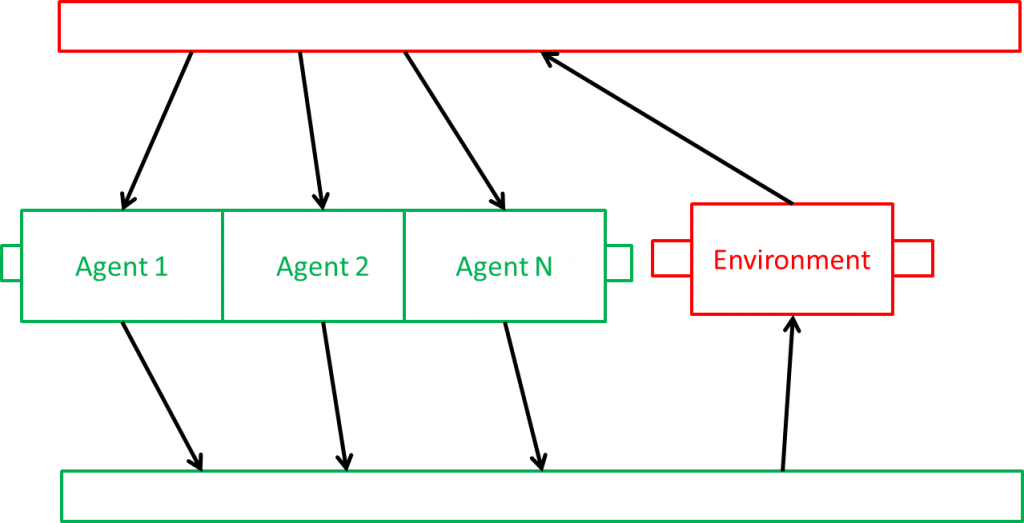

Overcoming this limitation has an obvious fix: share the working tape between the individual agents:

Then, each agent can not only record its own states on the tape, but can also consume the states of the other agents. The tape becomes an extended memory or a shared theory about the environment. Formally, I don’t believe there is any difference between this and the single-agent model because, as with multi-head Turing machines, the sequence of moves and actions can be collapsed to a single table and a single tape insofar as the entire environmental signal is available to all of the agents (or their concatenated form). Instead, the value lies in considering what a multi-agent system implies about shared meaning and the value of coordination: for any real environment, perfect coupling between a single agent and that environment is an unrealistic simplification. And shared communication and shared modeling translate an expansion of the individual agent’s model of the universe into greater individual reward and, as a side effect, greater group reward as well.
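A small sketch of the shared-tape idea, under the same assumed sampling rule (agent N skips N symbols, then reads one). The shared tape is modeled as a plain dictionary from stream position to symbol; `observed_positions`, `share_tape`, and the letter stream are hypothetical and only meant to show coverage growing when observations are pooled.

```python
def observed_positions(agent_number, stream_length):
    """Positions of the stream that a given agent reads (assumed skip rule)."""
    return set(range(agent_number, stream_length, agent_number + 1))

def share_tape(agent_numbers, stream):
    """Pool every agent's observations onto one tape: position -> symbol."""
    tape = {}
    for n in agent_numbers:
        for i in observed_positions(n, len(stream)):
            tape[i] = stream[i]
    return tape

stream = "abcdefghij"
tape = share_tape([1, 2], stream)

# Render the shared view, marking positions that no agent observed with "?".
print("".join(tape.get(i, "?") for i in range(len(stream))))  # ?bcd?f?hij
print(len(tape))  # 7
```

Alone, agent 1 sees five of the ten symbols and agent 2 sees three; reading from the shared tape, each has access to seven.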