Compliments of a discovery by Futurism, the paper The Autodidactic Universe by a smorgasbord of contemporary science and technology thinkers caught my attention for several reasons. First was Jaron Lanier as a co-author. I knew Jaron’s dad, Ellery, when I was a researcher at NMSU’s now defunct Computing Research Laboratory. Ellery had returned to school to get his psychology PhD during retirement. In an odd coincidence, my brother had also rented a trailer next to the geodesic dome Jaron helped design and Ellery lived after my brother became emancipated in his teens. Ellery may have been his landlord, but I am not certain of that.

Compliments of a discovery by Futurism, the paper The Autodidactic Universe by a smorgasbord of contemporary science and technology thinkers caught my attention for several reasons. First was Jaron Lanier as a co-author. I knew Jaron’s dad, Ellery, when I was a researcher at NMSU’s now defunct Computing Research Laboratory. Ellery had returned to school to get his psychology PhD during retirement. In an odd coincidence, my brother had also rented a trailer next to the geodesic dome Jaron helped design and Ellery lived after my brother became emancipated in his teens. Ellery may have been his landlord, but I am not certain of that.

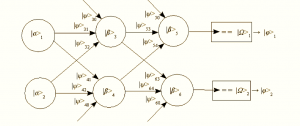

The paper is an odd piece of kit that I read over two days in fits and spurts with intervening power lifting interludes (I recently maxed out my Bowflex and am considering next steps!). It initially has the feel of physicists trying to reach into machine learning as if the domain specialists clearly missed something that the hardcore physical scientists have known all along. But that concern dissipated fairly quickly and the paper settled into showing isomorphisms between various physical theories and the state evolution of neural networks. OK, no big deal. Perhaps they were taken by the realization that the mathematics of tensors was a useful way to describe network matrices and gradient descent learning. They then riffed on that and looked at the broader similarities between the temporal evolution of learning and quantum field theory, approaches to quantum gravity, and cosmological ideas.

The paper, being a smorgasbord, then investigates the time evolution of graphs using a lens of graph theory. The core realization, as I gleaned it, is that there are more complex graphs (visually as well as based on the diversity of connectivity within the graph) and pointlessly uniform or empty ones.… Read the rest