Following Bill Joy’s concerns over the future world of nanotechnology, biological engineering, and robotics in 2000’s “Why the Future Doesn’t Need Us,” it has become fashionable to worry about “existential threats” to humanity. Nuclear power and weapons used to be dreadful enough, and clearly remain in the top five, but these rapidly developing technologies, along with asteroids and global climate change, have joined Oppenheimer’s misquoted “destroyer of all things” in portending our doom. Here are Max Tegmark, Stephen Hawking, and others in the Huffington Post, warning again about artificial intelligence:

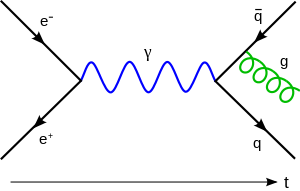

> One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand. Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.

I almost always begin my public talks on Big Data and intelligent systems with a presentation on industrial revolutions that progresses through Robert Gordon’s phases and then highlights Paul Krugman’s argument that Big Data and the improvements we are seeing in intelligent systems potentially represent the next industrial revolution. I am usually less enthusiastic about the timeline than nonspecialists are. But after giving a talk at PASS Business Analytics Friday in San Jose, I stuck around to listen in on a highly technical talk on statistical regularization and deep learning, and I found myself enthused about the topic once again. Deep learning uses artificial neural networks (ANNs) to classify information, but differs from traditional ANNs in that the systems are pre-trained using auto-encoders to acquire general knowledge about the data domain. To be clear, though, most of the problems tackled so far are “subsymbolic” ones, such as image recognition and speech.…