Kalev Leetaru at UIUC highlights the use of sentiment analysis over Big Data to retrospectively predict the Arab Spring in this paper. Dr. Leetaru took English transcriptions of Egyptian press sources and looked at aggregate measures of positive and negative sentiment terminology. The sentiment terminology is fairly simple in this case, consisting primarily of positive and negative adjectives, but it could be made more discriminating by checking for negating modifiers (“not happy,” “less than happy,” etc.). Leetaru points out some of the other follies that can arise when semi-intelligent broad measures like this one are applied too liberally:

It is important to note that computer–based tone scores capture only the overall language used in a news article, which is a combination of both factual events and their framing by the reporter. A classic example of this is a college football game: the hometown papers of both teams will report the same facts about the game, but the winning team’s paper will likely cast the game as a positive outcome, while the losing team’s paper will have a more negative take on the game, yielding insight into their respective views towards it.

This is an old issue in computational linguistics. In the “pragmatics” of automatic machine translation, for example, the classic problem is how to translate a word for the fighters in a rebellion. They could be anything from “terrorists” to “freedom fighters,” depending on the perspective of the translator and the original writer.
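To make the kind of tone measure discussed above concrete, here is a minimal Python sketch of a lexicon-based score with a crude check for negating modifiers. It is an illustration only and not Leetaru's actual method: the word lists, the `tone_score` function, and the one-word negation window are all assumptions made up for this example.

```python
import re

# Hypothetical toy lexicons; real tone dictionaries are far larger.
POSITIVE = {"happy", "good", "great", "peaceful", "hopeful"}
NEGATIVE = {"angry", "bad", "terrible", "violent", "fearful"}
# Assumed negators; only the immediately preceding word is checked.
NEGATORS = {"not", "no", "never", "hardly"}


def tone_score(text):
    """Return (positive hits - negative hits) divided by token count.

    A sentiment word directly preceded by a negator has its polarity flipped,
    so "not happy" counts as negative.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    score = 0
    for i, tok in enumerate(tokens):
        polarity = (tok in POSITIVE) - (tok in NEGATIVE)  # +1, -1, or 0
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score / len(tokens)


if __name__ == "__main__":
    print(tone_score("The crowd was happy"))
    print(tone_score("The crowd was not happy"))
```

Even with the negation check, a score like this still mixes the facts reported with the reporter's framing, and a multiword modifier such as “less than happy” slips past the one-word window.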

In Leetaru’s work, the end result was an unusually high churn of negative-going sentiment as the events of the Egyptian revolution unfolded.

But is it repeatable or generalizable? I’m skeptical. The rise of social media, enhanced government suppression of the media, spamming, disinformation, rapid technological change, distributed availability of technology, and the evolving government understanding of social dynamics can all significantly smear out the priors associated with the positive signal relative to the indeterminacy of the messaging.

Decompressing in NorCal following a vibrant Hadoop World. More press mentions:

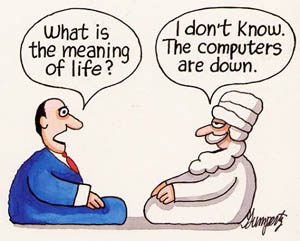

The impossibility of the Chinese Room has implications across the board for understanding what meaning means. Mark Walker’s paper “
