I’m unusually behind in my postings due to travel. I’ve been prepping for, and am now deep inside, a fresh pass through New Zealand after two years away. The complexity of the place has a certain draw that has lured me back, yet again, to backcountry tramping amongst the volcanoes and glaciers, and to leisurely beachfront restaurants painted with eruptions of summer flowers fueled by the regular rains.

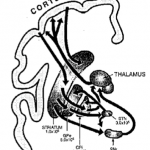

I recently wrote a technical proposal that rounded up a number of the most recent advances in deep learning neural networks. In each case, as with Google’s transformer architecture, there is a modest enhancement based on the realization of a deficit in the performance of one of two broad types of networks, recurrent and convolutional.

An old question is whether we learn anything about human cognition if we just simulate it using some kind of automatic learning mechanism. That is, if we use a model acquired through some kind of supervised or unsupervised learning, can we say we know anything about the original mind and its processes?

We can at least say that the learning methodology appears to be capable of achieving the technical result we were looking for. But it also might mean something a bit different: that there is not much more interesting going on in the original mind. In this radical corner sits the idea that cognitive processes in people are tactical responses left over from early human evolution. All you can learn from them is that they may be biased and tilted towards that early human condition, but beyond that things just are the way they turned out.

If we take this position, then, we might have to discard certain aspects of the social sciences.…