Most artificial intelligence researchers think unlikely the notion that a robot apocalypse or some kind of technological singularity is coming anytime soon. I’ve said as much, too. Guessing about the likelihood of distant futures is fraught with uncertainty; current trends are almost impossible to extrapolate.

Most artificial intelligence researchers think unlikely the notion that a robot apocalypse or some kind of technological singularity is coming anytime soon. I’ve said as much, too. Guessing about the likelihood of distant futures is fraught with uncertainty; current trends are almost impossible to extrapolate.

But if we must, what are the best ways for guessing about the future? In the late 1950s the Delphi method was developed. Get a group of experts on a given topic and have them answer questions anonymously. Then iteratively publish back the group results and ask for feedback and revisions. Similar methods have been developed for face-to-face group decision making, like Kevin O’Connor’s approach to generating ideas in The Map of Innovation: generate ideas and give participants votes equaling a third of the number of unique ideas. Keep iterating until there is a consensus. More broadly, such methods are called “nominal group techniques.”

Most recently, the notion of prediction markets has been applied to internal and external decision making. In prediction markets, a similar voting strategy is used but based on either fake or real money, forcing participants towards a risk-averse allocation of assets.

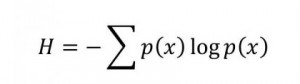

Interestingly, we know that optimal inference based on past experience can be codified using algorithmic information theory, but the fundamental problem with any kind of probabilistic argument is that much change that we observe in society is non-linear with respect to its underlying drivers and that the signals needed are imperfect. As the mildly misanthropic Nassim Taleb pointed out in The Black Swan, the only place where prediction takes on smooth statistical regularity is in Las Vegas, which is why one shouldn’t bother to gamble.… Read the rest

Research can flow into interesting little eddies that cohere into larger circulations that become transformative phase shifts. That happened to me this morning between a morning drive in the Northern California hills and departing for lunch at one of our favorite restaurants in Danville.

Research can flow into interesting little eddies that cohere into larger circulations that become transformative phase shifts. That happened to me this morning between a morning drive in the Northern California hills and departing for lunch at one of our favorite restaurants in Danville.