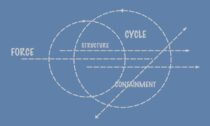

There is a little corner of philosophical inquiry that asks whether knowledge is justified based on all our other knowledge. This epistemological foundationalism rests on the idea that if we keep finding justifications for things, we can literally get to the bottom of it all. So, for instance, if we ask why we think there is a planet called Earth, we can find reasons for that belief that go beyond just “’cause I know!” such as “I sense the ground beneath my feet” and “I’ve learned empirically verified facts about the planet during my education that have been validated by space missions.” Then, in turn, we need to justify the idea that empiricism is a valid way of attaining knowledge with something like, “It has been shown to be reliable over time.” This notion of reliability is itself variable, however, since scientific insights and theories have changed depending on the domain in question and the timeframe. And why should we in fact value our senses as being reliable (or mostly reliable) given what we know about hallucinations, apophenia, and optical illusions?

There is also a curious argument in philosophy, called the Evolutionary Argument Against Naturalism (EAAN), that parallels this skepticism about the reliability of our perceptions, reason, and the “warrants” for our beliefs. I’ve previously discussed some aspects of EAAN, but it is, amazingly, still discussed in academic circles. In a nutshell, it asserts that our reliable reasoning can’t have evolved because evolution does not reliably deliver good, truthful ways of thinking about the world.

While it may seem obvious that the evolutionary algorithm does not deliver or guarantee completely reliable faculties for discerning true things from false things, the notion of epistemological pragmatism is a direct parallel to evolutionary search (as Fitelson and Sober hint).…