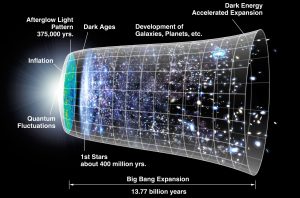

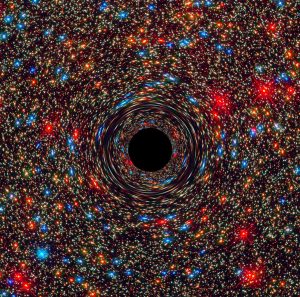

If we think about the evolution of living things, we generally start from the idea that evolution requires replicators, variation, and selection. But what if we loosened that up to the more everyday semantics of the word “evolution,” as when we talk about the evolution of galaxies, of societies, or of crystals? Each changes, grows, contracts, and has some kind of persistence that is mediated by a range of internal and external forces. For crystals, the availability of heat and access to the necessary chemicals are key. For galaxies, elements, gravity, and nuclear forces are paramount. In societies, technological invention and social revolution overlay the human replicators and their biological evolution. Should we make a leap and just declare that there is some kind of impetus or law to the universe such that, whenever there are composable subsystems and composition constraints, there will be an exploration of the allowed state space for composition? Does this add to our understanding of the universe?

Wong et al. say exactly that in “On the roles of function and selection in evolving systems” in PNAS. The paper reminds me of the various efforts to explain the growth of genetic information given raw conceptions of entropy and, indeed, some of those papers appear in its citations. It was once considered an intriguing problem how organisms become increasingly complex in the face of, well, the grinding dissolution of entropy. It wasn’t really that hard for most scientists: Earth receives an enormous load of solar energy that supports the push of informational systems towards negentropy. But, to the earlier point about composability and constraints, the energy arrives in a proportion that supports the persistence of systems that are complex.…