Fukuyama’s suggestion is intriguing but needs further development and empirical support before it can be considered more than a hypothesis. To be mildly repetitive, ideology derived from scientific theories should be subject to even more scrutiny than religious-political ideologies, if for no other reason than that it can be. But in order to drill down into the questions surrounding how reciprocal altruism might enable the evolution of linguistic and mental abstractions, we need to simplify the problem down to basics, then work outward.

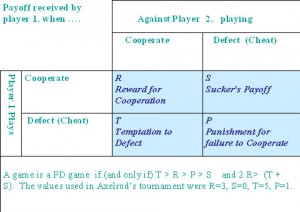

So let’s start with reciprocal altruism as a mere mathematical game. The iterated prisoner’s dilemma is a case study: you and a compatriot are accused of a heinous crime and put in separate rooms. If you deny involvement and so does your friend, you will each get 3 years in prison. If you admit to the crime and so does your friend, you will both get 1 year (cooperation behavior). But if one of you denies involvement while fingering the other, who admits, the accuser walks free while the other gets 6 years (defection strategy). Joint fingering is equivalent to two denials at 3 years each, since the evidence is equivocal. What does one do as a “rational actor” in order to minimize the penalty? The only solution is to betray your friend while denying involvement (deny, deny, deny): you get 3 years if he simply denies involvement, you walk if he admits, and you get 3 years if he fingers you too, which is the same as dual denials. Weighting the outcomes equally, the average time served is 1/3*3 + 1/3*0 + 1/3*3 = 2 years for betrayal versus 1/2*1 + 1/2*6 = 3.5 years for admitting to the crime.
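The averages can be recomputed directly from the sentences described above. The following is a minimal sketch, not a general game-theory model: the move labels (`"admit"`, `"deny"`, `"betray"`), the `YEARS` table, and the `expected_years` helper are names invented here for illustration, and the equal weighting of your friend's possible moves is the assumption the text itself makes.

```python
from fractions import Fraction

# Years "you" serve, keyed by (your move, your friend's move).
# Values are taken from the scenario in the text; the labels are illustrative.
YEARS = {
    ("betray", "deny"): 3,    # he only denies: same as dual denials
    ("betray", "admit"): 0,   # he admits while you finger him: you walk
    ("betray", "betray"): 3,  # joint fingering: equivocal evidence
    ("admit", "admit"): 1,    # mutual admission (cooperation)
    ("admit", "betray"): 6,   # you admit while he fingers you
}


def expected_years(my_move):
    """Average sentence for my_move, weighting the friend's moves equally."""
    outcomes = [years for (mine, _), years in YEARS.items() if mine == my_move]
    return Fraction(sum(outcomes), len(outcomes))


print(expected_years("betray"))  # 2
print(expected_years("admit"))   # 7/2
```

Under these assumptions betrayal has the lower average sentence, which is the point the comparison is meant to make.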

In other words, it doesn’t pay to cooperate.