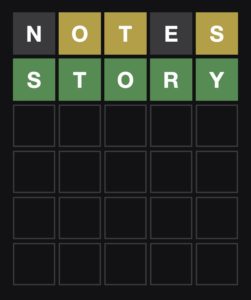

I occasionally do the Wordle at the New York Times. If you are not familiar, the game is very simple. You have six chances to guess a five-letter word. When you make a guess, letters that are in the correct position turn green. Letters that are in the word but in the wrong position turn yellow. The mental process of solving one is best served by opening with a word full of high-frequency English letters, like “notes,” and proceeding from there. At some point in the guessing, you find yourself anchoring the known letters and trying to remember words that might fit the sequence. A handy virtual keyboard below the word matrix helps by coloring each letter: black for letters you have tried that are not in the word, yellow for letters the word requires, green for letters fixed in position, and gray for letters that remain untested. After a bit, you start to apply little algorithms and exclusionary rules to the process: What if I anchor an S at the beginning? There are no five-letter words in English that end in “yi,” etc. There is a feeling of working through these mental strategies, and even a feeling of green and yellow as signposts along the way.
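The coloring rule has one subtlety worth spelling out: repeated letters. Below is a minimal Python sketch of the feedback for a single guess, assuming the commonly described rule that greens are assigned first and each remaining letter of the answer can turn at most one guess letter yellow. The function name `score` and the `G`/`Y`/`.` encoding are my own illustration, not anything the NYT exposes.

```python
from collections import Counter


def score(guess: str, answer: str) -> str:
    """Return Wordle-style feedback: 'G' green, 'Y' yellow, '.' not in word.

    Assumes the usual duplicate-letter rule: exact matches are marked first,
    then each unmatched answer letter can justify at most one yellow.
    """
    guess, answer = guess.lower(), answer.lower()
    result = ["."] * len(guess)

    # First pass: mark greens and count the answer letters left unmatched.
    unmatched = Counter()
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
        else:
            unmatched[a] += 1

    # Second pass: award yellows left to right, consuming unmatched letters.
    for i, g in enumerate(guess):
        if result[i] == "." and unmatched[g] > 0:
            result[i] = "Y"
            unmatched[g] -= 1

    return "".join(result)
```

For example, `score("speed", "abide")` marks only the first E yellow, because the answer contains a single E.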

I decided this morning to write the simplest one-line Wordle helper I could and solved the puzzle in two guesses:

Sorry for the spoiler if you haven’t gotten to it yet! Here’s what I needed to do the job: a five-letter word list for English and a word frequency list for English. I could have derived the first from the second, but I found the first one first, here. The second required logging into Kaggle to get a good, searchable CSV list.…
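A helper along these lines can be sketched in Python. This is a hypothetical reconstruction of the idea, not the author's one-liner: filter the five-letter list by the green, yellow, and gray clues, then sort the survivors by frequency. The file formats are assumptions: `load_words` expects one word per line, and `load_freq` expects a CSV with `word` and `count` columns, so adjust both to whatever lists you actually download. All function names are illustrative.

```python
import csv
import re


def load_words(path):
    """Read a word list, assuming one word per line; keep five-letter words."""
    with open(path, encoding="utf-8") as f:
        return [line.strip().lower() for line in f if len(line.strip()) == 5]


def load_freq(path):
    """Read a frequency CSV, assuming header columns named 'word' and 'count'."""
    with open(path, newline="", encoding="utf-8") as f:
        return {row["word"].lower(): int(row["count"]) for row in csv.DictReader(f)}


def candidates(words, freq, green=".....", yellow=None, gray=""):
    """Keep words consistent with the clues, most frequent first.

    green:  five-character pattern, a letter where the position is known
            and '.' where it is not (e.g. "s...e"); used as a regex
    yellow: dict mapping a letter to the positions (0-4) where it showed yellow
    gray:   letters that showed as not in the word
    """
    yellow = yellow or {}
    green_re = re.compile(green)
    # A repeated letter can be gray in one slot and green/yellow in another,
    # so letters also known to be present are dropped from the gray set.
    # This sketch does not model exact letter counts.
    absent = set(gray) - set(green.replace(".", "")) - set(yellow)

    keep = []
    for w in words:
        if len(w) != 5 or not green_re.fullmatch(w):
            continue
        if any(c in absent for c in w):
            continue
        # Yellow letters must be in the word, but not where they were guessed.
        if any(c not in w or any(w[i] == c for i in pos) for c, pos in yellow.items()):
            continue
        keep.append(w)

    # Words missing from the frequency table get 0 and sink to the bottom.
    return sorted(keep, key=lambda w: freq.get(w, 0), reverse=True)
```

A call like `candidates(words, freq, green="s...e", yellow={"t": {2}}, gray="nar")` keeps words that start with S, end with E, contain a T outside the middle slot, and contain no N, A, or R, ranked most frequent first. Treating gray letters as absent everywhere is a simplification; a stricter version would track letter counts.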