Having recently moved to downtown Portland within spitting distance of Powell’s Books, I had to wander through the bookstore despite my preference for digital books these days. Digital books are easily transported, can be purchased instantly, and can be carried in bulk without effort. What’s more, apps like Kindle Reader synchronize across platforms, allowing me to read wherever and whenever I want without interruption. But is there a discovery aspect to the physical shopping experience that is missing in the digital universe? I had to find out, so I hit the poetry and Western Philosophy sections at Powell’s as an experiment. And I did end up with new discoveries that I took home in physical form (I see it as rude to shop brick-and-mortar and then order via Amazon/Kindle), including a Borges poetry compilation and an unexpected little volume, The Body in the Mind, from 1987 by the then-head of the University of Oregon’s philosophy department, Mark Johnson.

A physical book seemed apropos given the topic of the second book, which focuses on the role of our physical bodies and experiences as central to the construction of meaning. Did our physical evolution and the associated requirements for survival also shape how our minds work? Psychologists and biologists would be surprised that there is any puzzlement over this likelihood, but Johnson is working against the backdrop of analytic philosophy, which treats propositional structure as the backbone of linguistic productions and the reasoning that drives them. Mind is disconnected from body in this tradition, and subjects like metaphors are often considered “noncognitive,” which is the negation of something like “reasoned through propositional logic.”

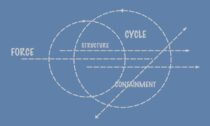

But how do we convert these varied metaphorical concepts derived from physicality into something structured that we can reason about using effective procedures?