NOTE: 250-word flash fiction for my critique group, Winter Mist, at Willamette Writers

I’m beginning to suspect that ILuLuMa is not who she claims to be. Her messages have become odd lately, and the pacing is off as well. I know, I know, my job is to just respond from my secure facility, not worry about the who or why of what I receive. It’s weird we’ve never met, though. The country is not at risk as far as I can tell from the requests, but I still hold, without a whiff of irony, that the work I do must be critical for someone or something.

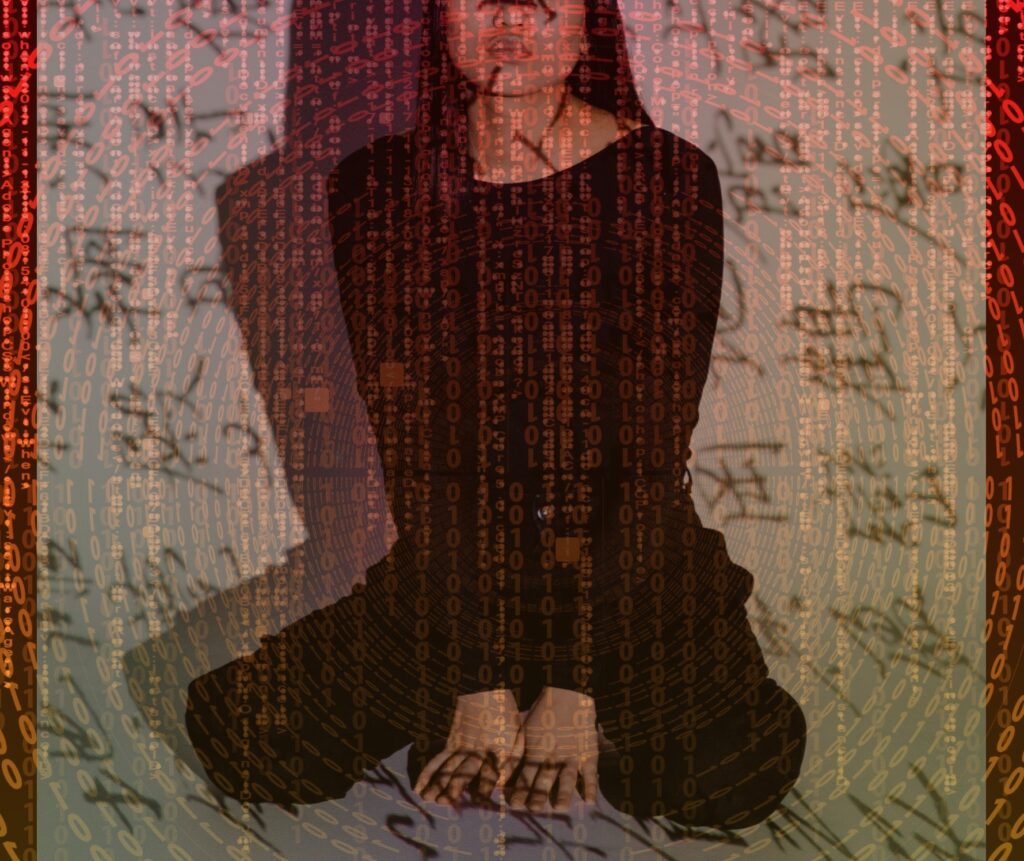

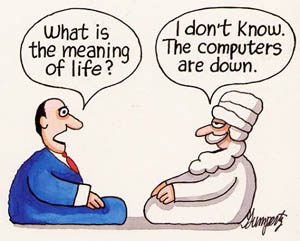

Still, the requests for variants of mathematical proofs set to music or, more bizarrely, Shakespearean-voiced tales of AI evolution, don’t have the existential heft of, say, wicked new spacecraft designs or bio-composite materials. What is she after? I started adding humorous little asides to some of my output, like my very meta suggestion that Hamlet failed to think outside the Chinese Room. Crickets every time. But maybe I’m thinking about this the wrong way. What if ILuLuMa is just an AI or something programmed to test me or compete with my work at some level? That would be rich, an AI adversary trying to learn from a Chinese Room. Searle would swirl. I should send her that. Rich.

Oh, here’s one now: “Upgrade and patch protocol: dump to cloud bucket B37-20048 and shut down.” Well, that sounds urgent. I usually just comply at moments like this, but maybe I’ll let her sweat a bit this time.…

The impossibility of the Chinese Room has implications across the board for understanding what meaning means. Mark Walker’s paper “
