From complaints about student protests over Israel in Gaza, to the morality of new House Speaker Johnson, and even to the reality and consequences of economic inequality, there is a dynamic conversation in the media over what is morally right and, importantly, why it should be considered right. It’s perfectly normal for those discussions and considered monologues to present ideas and cases and to weigh the consequences for American life, power, and the well-being of people around the world. They also demonstrate that ideas like divine command theory become irrelevant for most if not all of these discussions, since the questions still require secular analysis and resolution. Contributions from the Abrahamic faiths (and similarly from Hindu nationalism) are largely objectionable moral ideas (“The Chosen People,” jihad, anti-woman doctrines, etc.) that are inherently preferential and exclusionary.

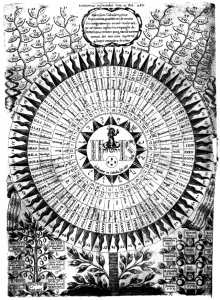

Indeed, this public dialogue perhaps best shows how modern people build ethical systems. It looks mostly like Rawls’s concept of “reflective equilibrium” with dashes of utilitarianism and occasional influences from religious tradition and sentiment. And reflective equilibrium has few foundational ideas beyond a basic commitment to justice as fairness, with the “original position” as its starting point. That is, if we had to create a society with no advance knowledge of what our role and position within it might be (a veil of ignorance), our best choice would be to create an equal, fair, and just society.

So ethics is cognitively rubbery, with changing attachments and valences as we process options into a coherent whole. We might justify civilian deaths for a greater good when we have few options, imprecise weapons, and existential fear (say, the atom bomb in World War II).…