LukeProg of CommonSenseAtheism fame caused a bit of a row when he declared that Solomonoff Induction largely rules out theism. He went on to expand on the theme:

If I want to pull somebody away from magical thinking, I don’t need to mention atheism. Instead, I teach them Kolmogorov complexity and Bayesian updating. I show them the many ways our minds trick us. I show them the detailed neuroscience of human decision-making. I show them that we can see (in the brain) a behavior being selected up to 10 seconds before a person is consciously aware of ‘making’ that decision. I explain timelessness.

The CSA community got riled up about these statements for several reasons, and the objections took several different forms:

- The focus on Solomonoff Induction/Kolmogorov Complexity is obscurantist in using radical technical terminology.

- The author is ignoring deductive arguments that support theist claims.

- The author has joined a cult.

- Inductive claims based on Solomonoff/Kolmogorov are no different from Reasoning to the Best Explanation.

I think all of these critiques are partially valid, though I don’t think there are any good reasons for thinking theism is true. The fourth one (which I contributed) was a personal realization for me. Though I have been fascinated with the topics related to Kolmogorov since the early 90s, I don’t think they are directly applicable to the topic of theism/atheism. Whether we are discussing the historical validity of Biblical claims or the logical consistency of extensions to notions of omnipotence or omniscience, I can’t think of a way that these highly mathematical concepts apply directly.

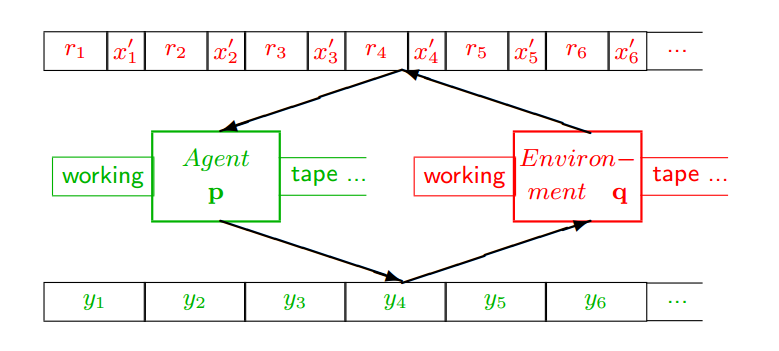

But what are we talking about? Solomonoff Induction, Kolmogorov Complexity, Minimum Description Length, Algorithmic Information Theory, and related ideas are formalizations of Occam’s Razor, the idea attributed to William of Occam (variously Ockham) that, given multiple explanations of a phenomenon, one should prefer the simpler explanation.…
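To make "simpler" a little more concrete, here is a minimal sketch in Python. It rests on a loud assumption: true Kolmogorov complexity is uncomputable, so the helper below (the hypothetical `compressed_length`, which is not from any of the sources discussed here) uses the size of a zlib-compressed string as a rough, computable upper-bound stand-in for description length. It illustrates the flavor of the idea and is not Solomonoff Induction itself.

```python
import os
import zlib


def compressed_length(data: bytes) -> int:
    """Size in bytes of the zlib-compressed data.

    Assumption: this is only a crude proxy for Kolmogorov complexity,
    which cannot be computed exactly.
    """
    return len(zlib.compress(data, 9))


# 1000 bytes with an obvious short description: "repeat 'ab' 500 times".
regular = b"ab" * 500

# 1000 bytes from the OS random source, with no pattern zlib can exploit.
random_like = os.urandom(1000)

print("regular:    ", compressed_length(regular))
print("random-like:", compressed_length(random_like))
```

Both inputs are the same length, but the patterned one shrinks to a small fraction of its size while the random one does not shrink at all. In the Occam sense, the first string has the simpler "explanation."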

The most interesting thing I’ve read this week comes from Jürgen Schmidhuber’s paper, Algorithmic Theories of Everything, which should be provocative enough to pique the most jaded of interests. The quote comes from deep into the paper:
